Data labelling: The dirty job of machine learning

What it is, how to do it, and what tools are available

You have a brilliant idea: you can utilize machine learning to create an application that will be extremely beneficial to society.

It will be a hit with everyone.

Wait.

So far, all you have is the idea.

The first step in creating a machine learning application is to decide what sort of data we'll need and then look for it in the appropriate application domain.

If we try to forecast shoe size based on a person's eye color, the application is unlikely to be successful. Likewise, using meteorological data to predict who will win the presidential election is probably not a good idea. We must use the appropriate data.

Once we've figured out what kind of data we want, we need to obtain or gather it, which frequently leads to another issue if we don't have any pre-built data sets: the data we collect usually comes without labels.

In this post, we'll go through data collection methodologies for specific applications, as well as how to label data that has already been acquired. It will be broken down into the following sections:

- Introduction to Data Labeling: what is data labelling and why do we need it?

- Data labelling best practices: tips and tricks for labelling data well

- Individuals and teams classify data in different ways: the methods currently available for getting your data labelled

- How to get labelled data: ways to collect data that already comes with labels

- Data labelling tools: an overview of the best data labelling solutions on the market

If you think you'll appreciate it, sit back, relax, and enjoy it!

Introduction to Data Labeling

If we want to utilise a machine learning-based solution, we must assess the outcomes (predictions) to check whether they are of sufficient quality and whether they are biased in any way.

This results in a highly iterative procedure that includes both initial model training and recurrent re-trainings after models are deployed.

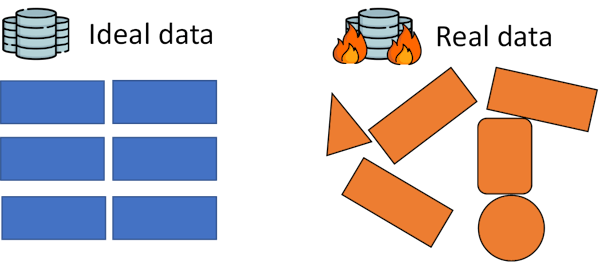

Perfect data, such as that seen in Kaggle contests or data sets from online courses, is rarely found in real-world circumstances. The majority of the time, data is sloppy, incomplete, and unstructured, and it frequently lacks quality labelling.

For one project, I had to hand-label roughly 1,500 photographs of high-voltage towers to indicate whether they had any defects or were rusted.

We prepare this data for learning by labelling it so that our data-hungry supervised machine learning models can use it.

These models will learn from what they see, with data labels playing a crucial role in whether our models succeed or fail miserably.

To be honest, data labelling is a tiresome and uninteresting activity most of the time. It is something that no one wants to do. But you have to do it sometimes, so you might as well do it right. Do you want to know how to do it? Then continue reading.

Data labelling best practices

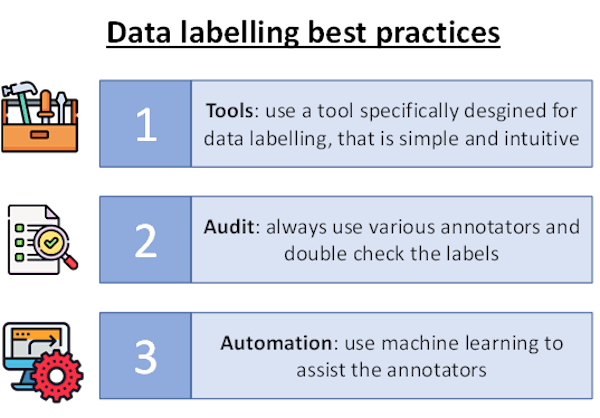

If you ever have to manually label data, there are a few recommended practices you should be aware of in order to achieve the best possible results:

- Always use a tool specialised for data labelling if at all possible (we will see the best tools out there later).

- Because data labelling is a repetitive job, the tool you choose should be straightforward and intuitive.

- People make mistakes, so if you can, have someone double-check the labelling.

- Audit the labelled data to verify that it has been labelled correctly.

- Consider automated data labelling or semi-supervised learning if you have the expertise: machine learning can come to the rescue of machine learning.

Once a machine learning model has been trained, it can greatly aid in the labelling of fresh data.

We shouldn't let the model label all of the data; instead, we should use it to aid our teams in data labelling by providing insights from the model and even allowing it to classify data points for which it has high confidence automatically.
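As a rough illustration of that idea, here is a minimal sketch that accepts a model's label only when its confidence is high and sends everything else to a human. The threshold value, the function name `route_predictions`, and the sample file names are all assumptions made for this example; in practice the probabilities would come from your own trained model.

```python
from typing import Dict, List, Tuple

# Hypothetical cut-off: predictions at or above it are auto-labelled.
CONFIDENCE_THRESHOLD = 0.95


def route_predictions(
    predictions: Dict[str, Dict[str, float]],
    threshold: float = CONFIDENCE_THRESHOLD,
) -> Tuple[Dict[str, str], List[str]]:
    """Split items into auto-labelled ones and ones that need human review.

    `predictions` maps an item id to the class probabilities that a
    trained model produced for that item.
    """
    auto_labelled: Dict[str, str] = {}
    needs_review: List[str] = []
    for item_id, class_probs in predictions.items():
        # Pick the class the model considers most likely.
        best_class = max(class_probs, key=class_probs.get)
        if class_probs[best_class] >= threshold:
            auto_labelled[item_id] = best_class
        else:
            needs_review.append(item_id)
    return auto_labelled, needs_review


# Made-up model outputs for three images.
predictions = {
    "tower_001.jpg": {"rusted": 0.98, "ok": 0.02},
    "tower_002.jpg": {"rusted": 0.55, "ok": 0.45},
    "tower_003.jpg": {"rusted": 0.03, "ok": 0.97},
}
auto_labelled, needs_review = route_predictions(predictions)
print("Auto-labelled:", auto_labelled)
print("Send to a human:", needs_review)
```

A stricter threshold means fewer automatic labels but also fewer model mistakes slipping into the data set, so it is worth auditing a sample of the auto-labelled items too.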

So, now that we've learned some best practices, how do we actually go about labelling our data sets? Let's have a look!

Individuals and teams classify data in different ways

Individuals and organisations use a variety of methods to get through this time-consuming, manual task:

- Labelling the data by hand (boring and time-consuming)

- Hiring remote teams or specialised organisations in places where labour is cheap

- Hiring freelancers to hand-label the data or to devise a smart alternative, such as web scraping the labels

- Using crowdsourcing services such as Amazon Mechanical Turk or Figure 8

Some options may suit you better than others, depending on your financial and time constraints, but if you're outsourcing the labelling, there are a few things to consider in light of the best practices above.

If you're hiring remote teams or enlisting the help of another company to label your data, make sure you spell out exactly how you want it done and get a sample of labelled data right away to double-check that it's being done correctly.

Do the same for freelancers who use clever/automatic labelling techniques, but place an even greater focus on evaluating the quality and accuracy of the labels. Make sure you're familiar with the procedure they're using.

Platforms like AMT and Figure 8 come with some automation for this process: many data points are labelled by two or more annotators to ensure that the labels are consistent, and you get a nice online interface to accept or reject any labels that you double-check.
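If you collect several annotations per data point yourself, a simple way to combine them is a majority vote. The sketch below is only an illustration of that idea, not how AMT or Figure 8 implement it; the function name `majority_label`, the `min_agreement` parameter, and the sample annotations are assumptions.

```python
from collections import Counter
from typing import List, Optional


def majority_label(votes: List[str], min_agreement: float = 0.5) -> Optional[str]:
    """Return the label chosen by more than `min_agreement` of the annotators.

    Returns None when there are no votes or no label reaches that share,
    which marks the item as disputed.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_agreement else None


# Made-up annotations: each item was labelled by two or three people.
annotations = {
    "tweet_1": ["positive", "positive", "negative"],
    "tweet_2": ["negative", "positive"],
    "tweet_3": ["negative", "negative", "negative"],
}
consensus = {item: majority_label(votes) for item, votes in annotations.items()}
disputed = [item for item, label in consensus.items() if label is None]
print("Consensus labels:", consensus)
print("Needs another look:", disputed)
```

Items that end up in `disputed` are good candidates for the manual double-check mentioned above.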

How to get labelled data

There are a few options for collecting data that has already been labelled if you don't want to go through the manual labelling process:

- Surveys: You can design online surveys in which users label your data by answering the questions.

- Noisy Labeling: This technique involves automatically labelling data according to a set of rules. If you wanted to classify tweets by their sentiment (positive or negative), for example, you could collect tweets containing a smiling face emoji or an angry face emoji and label those with the smiling face as positive and those with the angry face as negative.

- Kaggle and pre-made data sets: Kaggle, the well-known data science competition website, also provides a data set category where you might be able to locate data that meets your requirements. There are also numerous data set repositories available online.

- Data Mining: If you need to acquire data for an ad-hoc data set, you can use an indirect data mining technique. For instance, I once worked on a project that required an intelligent shoe size recommender for several brands and shoe models. A 42EU Nike size may not fit the same as a 42EU Adidas size. We intended to take people's foot measurements and create a model that would suggest a size for a certain brand. We extracted the standard size charts from the websites of some brands. We engaged a freelancer to gather the equivalences for others. Finally, in order to gather more data and double-check the information we already had, we created a landing page that suggested visitors' shoe sizes for a specific brand based on their foot measurements and a few simple rules, but only if they also gave us their size for another brand. We were able to collect even more data as a result of this.
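To make the noisy labelling idea from the list above more concrete, here is a minimal sketch of a rule-based sentiment labeller. The marker sets and the function name `noisy_sentiment_label` are assumptions for illustration; the rule described above only mentions a smiling face and an angry face. Emoji are written as Unicode escapes so the code survives copy-pasting.

```python
from typing import Optional

# Assumed marker sets: smiling face emoji / ":)" versus angry face emoji / ":(".
POSITIVE_MARKERS = ("\U0001F642", ":)")
NEGATIVE_MARKERS = ("\U0001F620", ":(")


def noisy_sentiment_label(tweet: str) -> Optional[str]:
    """Label a tweet from the emoji it contains, or return None if unsure."""
    has_positive = any(marker in tweet for marker in POSITIVE_MARKERS)
    has_negative = any(marker in tweet for marker in NEGATIVE_MARKERS)
    if has_positive and not has_negative:
        return "positive"
    if has_negative and not has_positive:
        return "negative"
    # No marker at all, or conflicting markers: leave the tweet unlabelled.
    return None


# Made-up tweets.
tweets = [
    "Loving the new shoes :)",
    "My flight got cancelled \U0001F620",
    "Just landed in Madrid",
]
labels = [noisy_sentiment_label(tweet) for tweet in tweets]
print(list(zip(tweets, labels)))
```

Labels produced this way are noisy by design, so it is still worth auditing a sample of them by hand.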

Finally, let's have a look at a comprehensive summary of the data labelling tools available, as well as their benefits and drawbacks!

Data labelling tools

If you find yourself in a scenario where you need to manually label a data set, there are a variety of tools available on the market. What criteria do we use to select one?

The tool you use must be appropriate for the labelling task at hand: some are designed exclusively for labelling photos, while others are designed specifically for labelling texts, and so on. The first step is to determine your labelling requirements and select a tool that meets them.

Following that, if you have a budget, you must decide whether to use a paid or free tool. While commercial tools are often superior, there are some excellent free ones available, as we will see in a moment.

Because they were created with a specific goal in mind (assisting people in labelling data), the majority of these tools are basic and nimble. However, they all have their own unique features, and some are more user-friendly than others.

Let's take a look at them!

LabelImg

We'll start with an image annotation tool that can be used for object detection tasks. We don't usually use a dedicated tool for image classification tasks (such as determining whether a picture shows a dog or a cat), because we only need to record the class in a simple format.

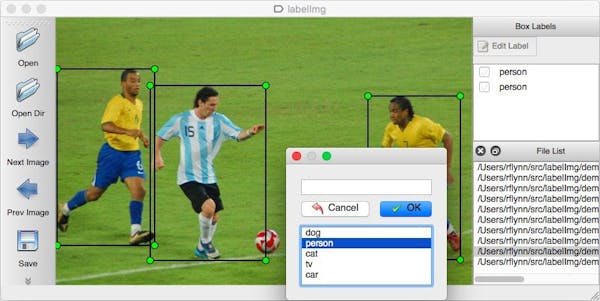

LabelImg is a Python-based graphical image annotation tool. It has a very simple interface that lets users navigate through a folder of photographs and draw bounding boxes around certain items within the images.

The annotations are saved as XML files, which can be easily converted into a format that most computer vision models, such as YOLOv5, can understand. A screenshot of the interface can be seen in the image above.
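As a rough idea of what such a conversion looks like, the sketch below turns one XML annotation into YOLO-style text lines (`class_id x_center y_center width height`, all normalised to the image size). It assumes the XML follows the Pascal VOC layout; the class list, the function name `voc_xml_to_yolo_lines`, and the sample annotation are made up for the example.

```python
import xml.etree.ElementTree as ET
from typing import List

# Assumed class list: the position of each name becomes its YOLO class id.
CLASS_NAMES = ["tower", "rust"]


def voc_xml_to_yolo_lines(xml_text: str, class_names: List[str]) -> List[str]:
    """Convert one Pascal VOC style annotation into YOLO text lines."""
    root = ET.fromstring(xml_text)
    img_w = float(root.findtext("size/width"))
    img_h = float(root.findtext("size/height"))
    lines = []
    for obj in root.iter("object"):
        class_id = class_names.index(obj.findtext("name"))
        box = obj.find("bndbox")
        xmin = float(box.findtext("xmin"))
        ymin = float(box.findtext("ymin"))
        xmax = float(box.findtext("xmax"))
        ymax = float(box.findtext("ymax"))
        # YOLO stores the box centre and size as fractions of the image size.
        x_center = (xmin + xmax) / 2 / img_w
        y_center = (ymin + ymax) / 2 / img_h
        width = (xmax - xmin) / img_w
        height = (ymax - ymin) / img_h
        lines.append(
            f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
        )
    return lines


# Made-up annotation for a 400x200 image with one bounding box.
SAMPLE_XML = """<annotation>
  <filename>tower_001.jpg</filename>
  <size><width>400</width><height>200</height><depth>3</depth></size>
  <object>
    <name>rust</name>
    <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>300</xmax><ymax>150</ymax></bndbox>
  </object>
</annotation>"""

yolo_lines = voc_xml_to_yolo_lines(SAMPLE_XML, CLASS_NAMES)
print("\n".join(yolo_lines))
```

In a real project you would read each XML file from disk and write the resulting lines to a `.txt` file with the same base name as the image.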

SuperAnnotate

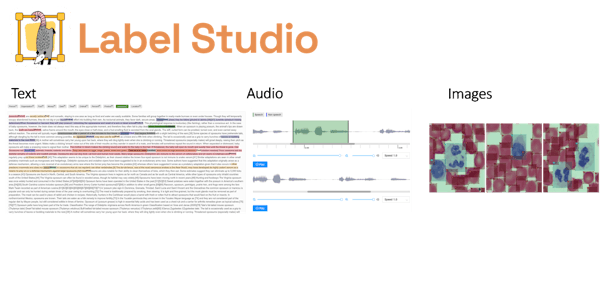

SuperAnnotate is a data labelling SaaS with an SDK that allows it to be integrated into any app. Like Label Studio, it is a multi-task labelling application that can be used to label photos, videos, and text, as well as for other activities such as data curation, automation, and quality control.

It also includes a marketplace for annotations, similar to what you'd see on Amazon Mechanical Turk (AMT) or Figure 8. Because it is a premium product, we only recommend it if you work for a company that needs to label data on a regular basis.

Clarifai

Amazon Sagemaker

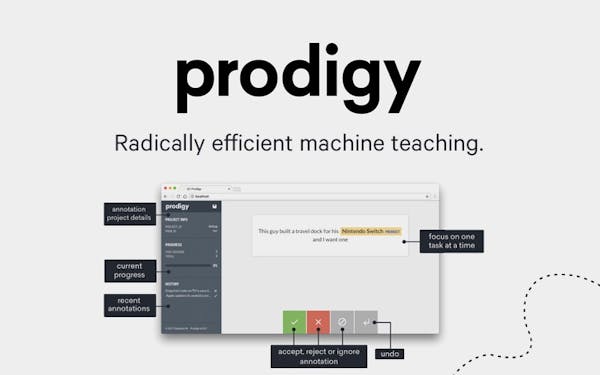

Prodigy

Prodigy is a premium data labelling tool developed by the same people who brought you spaCy, the well-known Python NLP toolkit. It is based on active learning and on cutting-edge machine learning and user experience insights. The web interface is simple to use, and the labels suggested by the tool are highly accurate and only appear when they are required. It's one of the best annotation tools on the market, but it's also one of the most expensive.

Conclusion

In this article, we've looked at what data labelling is, some best practices with tips and tricks, and a quick summary of the key tools on the market.

I recommend that you test out a few of them, even if it's just on a dummy or made-up task, to familiarise yourself with them and learn more about their capabilities.

Have a good day and take advantage of AI!

- Saleem Raza

- Mar 28, 2022