The popular languages used for data science are:

Many languages are used in data science, including Python, R, SQL, Java, Scala, C++, JavaScript, and Julia. The most popular among them are Python, R, and SQL.

Python:

Python is a powerhouse language. It is open source, and more than 80 per cent of data professionals use Python.

Libraries and packages used in the data science field are:

For Visualisation:

Matplotlib - plots and graphs.

Seaborn - heatmaps, time series, graphs.

For Algorithms:

Scikit-learn - machine learning: regression, classification.

Statsmodels - exploring data, estimating statistical models, and performing statistical tests.

R:

R is free software known for statistical computing and graphical presentation. It integrates with other languages and has more than 15,000 packages. It is used for statistical analysis, data analysis, machine learning, and data processing and manipulation.

SQL (Structured Query Language):

SQL is a query language used to communicate with databases: it retrieves, inserts, updates, and deletes data.

It is simple yet powerful and works with most relational databases, including those that store large datasets.
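As a minimal sketch of these operations, the following uses Python's built-in `sqlite3` module with an in-memory database. The `employees` table, its columns, and the sample rows are hypothetical and exist only for illustration.

```python
import sqlite3

# In-memory database; the table and rows are made-up illustration data.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")

# Insert rows.
cur.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [("Asha", 52000.0), ("Ben", 48000.0), ("Chen", 61000.0)],
)

# Update (edit) a row.
cur.execute("UPDATE employees SET salary = ? WHERE name = ?", (50000.0, "Ben"))

# Delete a row.
cur.execute("DELETE FROM employees WHERE name = ?", ("Chen",))

# Retrieve the remaining rows, highest salary first.
rows = cur.execute(
    "SELECT name, salary FROM employees ORDER BY salary DESC"
).fetchall()
print(rows)
conn.close()
```

The `?` placeholders pass values separately from the SQL text, which is the usual way to avoid SQL injection when values come from users.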

CATEGORIES OF DATA SCIENCE TOOLS BASED ON REQUIREMENTS

| PROCESS | OPEN SOURCE TOOLS | COMMERCIAL TOOLS | CLOUD-BASED TOOLS |
| --- | --- | --- | --- |
| Data management (the process of persisting and retrieving data) | Relational databases (MySQL, PostgreSQL); NoSQL databases (MongoDB, Apache CouchDB, Apache Cassandra); file-based storage (Hadoop); cloud file systems (Ceph); Elasticsearch (stores text data, builds a search index) | Oracle, Microsoft SQL Server, IBM Db2 | Amazon DynamoDB (NoSQL), Cloudant (based on Apache CouchDB), IBM Db2 |
| Data integration and transformation (ETL process) | Apache Airflow, Kubeflow, Apache Kafka, Apache NiFi, Apache Spark SQL, Node-RED | Informatica PowerCenter, IBM InfoSphere DataStage, SAP, Oracle, SAS, Talend, Microsoft, Watson Studio Desktop | Informatica, IBM Data Refinery (Watson Studio) |
| Data visualisation (initial data exploration, as well as part of a final deliverable) | Hue, Kibana, Apache Superset | Tableau, Microsoft Power BI, IBM Cognos Analytics, Watson Studio Desktop | Datameer, IBM Cognos Analytics, IBM Data Refinery (Watson Studio) |
| Model building (creating a machine learning or deep learning model using algorithms) | | IBM SPSS Modeler, SAS Enterprise Miner, Watson Studio Desktop | IBM Watson Machine Learning, Google Cloud |
| Model deployment (making models available to third parties) | Apache PredictionIO, Seldon, MLeap, TensorFlow Serving, TensorFlow Lite, TensorFlow.js | SPSS Collaboration and Deployment Services (models can be exported as PMML, which open-source software can read) | SPSS, IBM Watson Machine Learning |
| Model monitoring and assessment (continuous performance quality checks on deployed models) | ModelDB, Prometheus, IBM AI Fairness 360 Open Source Toolkit, IBM Adversarial Robustness 360 Toolbox, IBM AI Explainability 360 Toolkit | | AWS, Watson OpenScale |
| Code asset management (versioning and other collaboration features to facilitate teamwork) | Git (GitHub, GitLab, Bitbucket) | | |
| Data asset management (data governance or data lineage; replication, backup, rights management) | Apache Atlas, Kylo | Informatica Enterprise, IBM | |
| Development environments or Integrated Development Environments (IDEs) (implement, execute, test, and deploy work) | Jupyter, JupyterLab, Apache Zeppelin, RStudio, Spyder | Watson Studio Desktop | |
| Execution environment (data preprocessing, model training, and deployment) | Apache Spark (linear scalability), Apache Flink, RISELab Ray | | |
| Fully integrated visual tools (cover all processes) | KNIME, Orange | Watson Studio, H2O Driverless AI | Microsoft Azure ML |

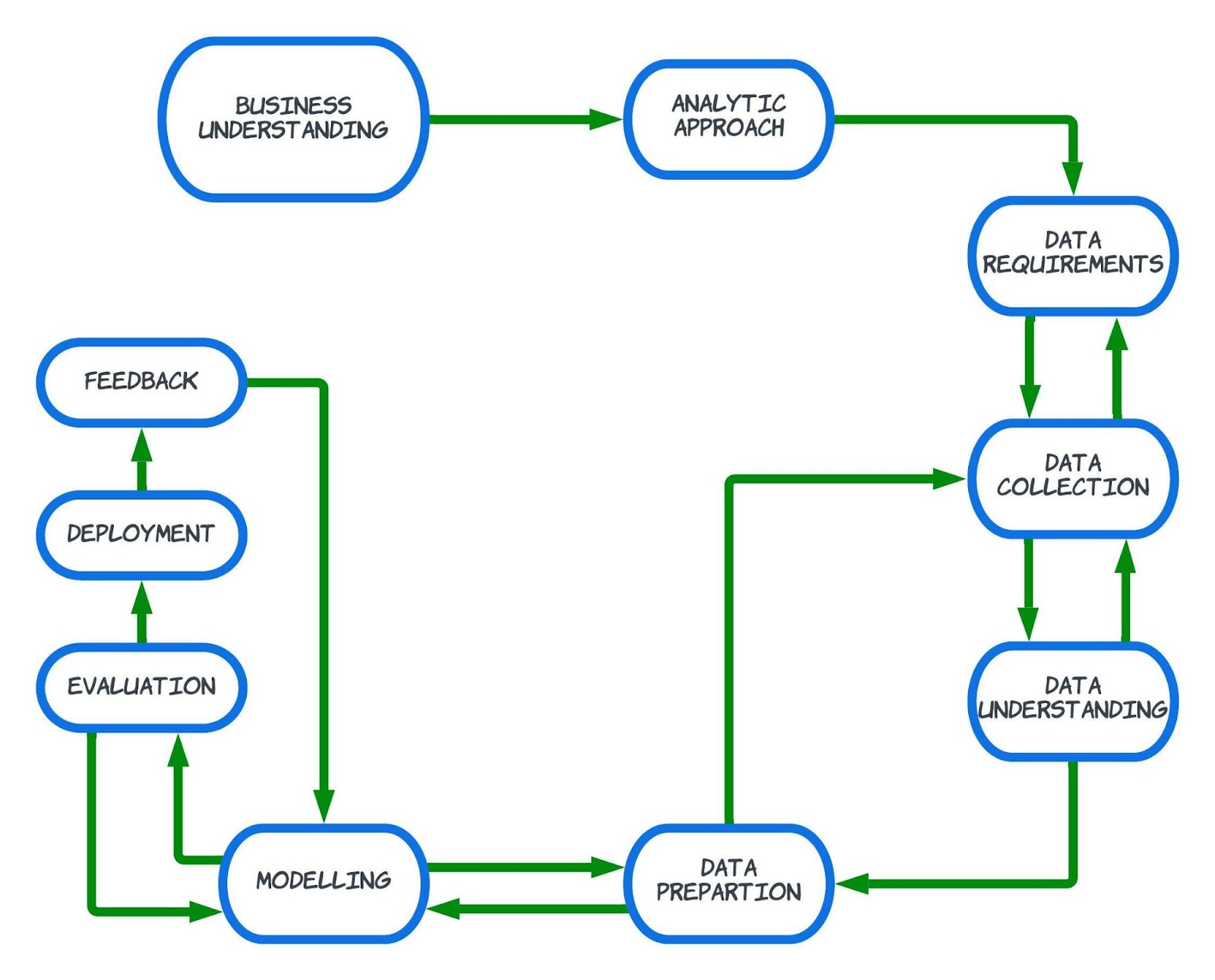

Data Science Methodology:

Business understanding

The first and most important stage of the data science methodology is understanding the goal: the problem to be solved and how solving it benefits the client. A clearer understanding of the problem leads to a better approach to it.

Analytical approach

Once the problem is understood, the analytical approach is chosen: the kind of analysis that can produce the insights needed to solve it. This choice then determines what data is required.

Data requirements

The required data is determined by the analytical approach and by identifying the factors that affect the outcome.

Data collection

Data is collected from databases, APIs, or any other source that is provided; sometimes it is gathered from the internet by web scraping. The data may be structured or unstructured. Once the available data has been reviewed, it is decided whether extra data is needed and, where data is unavailable, which alternative data can be used instead.

Data understanding

The collected data is then studied through exploratory analysis. Collecting and understanding data are iterative steps: they are repeated as necessary until the data is complete and ready to yield insights.
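A first pass at exploratory analysis often amounts to simple descriptive statistics. The sketch below uses only Python's standard library; the `ages` sample and the `summarize` helper are hypothetical names chosen for illustration.

```python
import statistics

# Hypothetical sample: ages from a collected dataset, with one missing value.
ages = [23, 31, 27, None, 45, 38, 29]

def summarize(values):
    """Return basic descriptive statistics, ignoring missing (None) entries."""
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "min": min(present),
        "max": max(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
    }

summary = summarize(ages)
print(summary)
```

A non-zero `missing` count or a large gap between mean and median is a hint that more collection or cleaning may be needed before modelling.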

Data preparation

Data preparation is the most time-consuming stage. Here the data is cleaned and transformed, and missing values and outliers are handled: records with missing values can be removed if they are not essential, or the data can be collected again if they are. The result is a dataset ready for modelling.
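One possible way to handle missing values and outliers is sketched below. It assumes that rows with missing values can simply be dropped and that outliers are defined by the common 1.5 × IQR rule; both are illustrative choices, not the only options. The `raw` records and the `prepare` helper are hypothetical.

```python
import statistics

# Hypothetical raw records: (customer_id, monthly_spend); None marks a missing value.
raw = [
    (1, 120.0), (2, None), (3, 135.0), (4, 128.0), (5, 2500.0),
    (6, 110.0), (7, 140.0), (8, 125.0), (9, 132.0),
]

def prepare(records):
    """Drop rows with missing values, then drop outliers using the 1.5 * IQR rule."""
    complete = [(cid, x) for cid, x in records if x is not None]
    values = [x for _, x in complete]
    q1, _, q3 = statistics.quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [(cid, x) for cid, x in complete if low <= x <= high]

clean = prepare(raw)
print(clean)
```

Here customer 2 is dropped for the missing value and customer 5 for the extreme spend; in practice, whether to drop, impute, or re-collect depends on how essential the affected records are.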

Modelling

Models are built by training them on a dataset and then testing them. The dataset can be split into separate training and testing sets, which gives a more reliable estimate of performance, or the whole dataset can be used for both training and testing, which is less reliable because the model is tested on data it has already seen.
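The split into training and testing sets can be sketched in plain Python as follows. The synthetic dataset, the 25 per cent test fraction, and the helper names `train_test_split` and `fit_line` are assumptions made for this example; a least-squares line stands in for whatever model is actually being built.

```python
import random

# Hypothetical dataset: y is roughly 2*x + 1 with small noise.
random.seed(0)
data = [(x, 2 * x + 1 + random.uniform(-0.5, 0.5)) for x in range(20)]

def train_test_split(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of the rows and split them into training and testing sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def fit_line(rows):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    sxx = sum((x - mean_x) ** 2 for x, _ in rows)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

train, test = train_test_split(data)
slope, intercept = fit_line(train)

# Mean absolute error on rows the model never saw during training.
mae = sum(abs(y - (slope * x + intercept)) for x, y in test) / len(test)
print(f"slope={slope:.2f} intercept={intercept:.2f} test MAE={mae:.2f}")
```

Because the error is measured only on held-out rows, it reflects how the model behaves on unseen data rather than how well it memorised the training set.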

Evaluation

The model is evaluated to check whether the accuracy of its predictions is acceptable. If the accuracy is low, modelling is repeated with corrections.
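For a classification model, the accuracy check might look like the sketch below. The labels, the predictions, and the 0.9 acceptance threshold are hypothetical; precision and recall are included because accuracy alone can mislead when classes are imbalanced.

```python
# Hypothetical true labels and model predictions for a binary classifier.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 1, 0]

def evaluate(actual, predicted):
    """Return accuracy, precision and recall for binary labels (1 = positive)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

metrics = evaluate(y_true, y_pred)

# Assumed acceptance threshold; below it, the modelling step would be repeated.
needs_rework = metrics["accuracy"] < 0.9
print(metrics, needs_rework)
```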

Deployment

After evaluation, the model is made available to the third party and monitored with continuous performance quality checks to confirm that it keeps working properly.
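A continuous quality check can be as simple as tracking accuracy over the most recent predictions once the true outcomes become known. The `AccuracyMonitor` class, its window size, and its threshold are hypothetical; dedicated monitoring tools offer far more than this sketch.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions of a deployed model."""

    def __init__(self, window=5, threshold=0.6):
        self.results = deque(maxlen=window)  # keeps only the last `window` outcomes
        self.threshold = threshold

    def record(self, predicted, actual):
        """Store whether one prediction matched the observed outcome."""
        self.results.append(predicted == actual)

    def accuracy(self):
        """Return accuracy over the current window, or None if nothing is recorded."""
        return sum(self.results) / len(self.results) if self.results else None

    def needs_attention(self):
        """Return True when windowed accuracy has fallen below the threshold."""
        acc = self.accuracy()
        return acc is not None and acc < self.threshold

monitor = AccuracyMonitor(window=5, threshold=0.6)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1), (1, 0)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_attention())
```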

Feedback

Feedback on the model's predictions or insights is then gathered. If further predictions or new insights are required, the modelling process is repeated until the feedback on the model is satisfactory.

These are the stages of the data science methodology and the tools used for each purpose.