Decision Tree Algorithm with Python Implementation

DEFINITION

A decision tree is a flowchart-like structure in which each internal node represents a "test" on an attribute, each branch represents the outcome of the test, and each leaf node represents a class label and branches represent conjunctions of features that lead to those class labels.

The paths from root to leaf represent classification rules.

A decision tree consists of three types of nodes:

- Decision nodes – typically represented by squares

- Chance nodes – typically represented by circles

- End nodes – typically represented by triangles

- Programmatically, Decision Tree is nothing but giant structure of If Else.

- Mathematically, Decision Tree use hyperplanes which run parallel to any of the axes to cut your co-ordinate system into hyper-cuboids.

- Decision Trees are a non-parametric supervised learning method used for both classification and regression tasks.

- Tree models where the target variable can take a discrete set of values are called classification trees. Decision trees where the target variable can take continuous

values are called regression trees. Classification And Regression Tree (CART) is general term for this.

ENTROPY (MEASURE OF DISORDER IN THE SYSTEM)

Definition: It is a commonly used concept in Information Theory and is a measure of ‘purity’ of an arbitrary collection of information.

Mathematical Definition:

Here , given a collection S , containing +ve and -ve examples, the Entropy of S is given by the above equation, where p represents probability of each class.

Generalized equation of the Entropy is :

c = Total number of unique classes in target variable

- E(S)= 0 if all the data points in the target variable belongs to the single class only.

- E(S) = 1 equal proportion of data points from all classes in target variable.

- E(S) ---> close to 1 infers that there is approx. equal proportion of data distribution within classes yet there is slight difference.

- Value of Entropy lies between 0 to 1 for Binary classification problem , if number of classes is more than 2 than maximum value can be more than 1.

INFORMATION GAIN

- Information gain is used to decide which feature to split on at each step in building the tree.

- Simplicity is best, so we want to keep our tree small. To do so, at each step we should choose the split that results in the purest daughter nodes.

- A commonly used measure of purity is called information gain. For each node of the tree, the information value measures how much information a feature gives us about the class.

- The split with the highest information gain will be taken as the first split and the process will continue until all children nodes are pure, or until the information gain is 0.

MATHEMATICAL FORMULA FOR INFORMATION GAIN

Here, the Gain(S,A) is the information gain of an attribute A relative to a sample S. The Values(A) is set of all possible values for the attribute A. Entropy(S) denotes entropy of the entire dataset.

GINI IMPURITY

- Gini Impurity is a measurement of the likelihood of an incorrect classification of a new instance of a random variable, if that new instance were randomly classified according to the distribution of class labels from the data set.

- If our dataset is Pure then likelihood of incorrect classification is 0. If our sample is mixture of different classes then likelihood of incorrect classification will be high

- Gini Impurity is computationally less expensive than Entropy.

STEPS FOR MAKING DECISION TREE

- Get list of rows (dataset) which are taken into consideration for making decision tree(recursively at each nodes).

- Calculate uncertainty of our dataset or Gini impurity or how much our data is mixed up etc.

- Generate list of all question which needs to be asked at that node.

- Partition rows into True rows and False rows based on each question asked.

- Calculate information gain based on Gini Impurity and partition of data from previous step.

- Update highest information gain based on each question asked.

- Update best question based on information gain (higher information gain).

- Divide the node on best question. Repeat again from step 1 again until we get pure node (leaf nodes).

HOW TO AVOID OVERFITTING THE DECISION TREE MODEL ?

- To avoid decision tree from overfitting we remove the branches that make use of features having low importance. This method is called as Pruning or post-pruning. This way we will reduce the complexity of tree, and hence improves predictive accuracy by the reduction of overfitting.

- Pruning should reduce the size of a learning tree without reducing predictive accuracy as measured by a cross-validation set.

THERE ARE 2 MAJOR PRUNING TECHNIQUES:

• Minimum Error: The tree is pruned back to the point where the cross-validated error is a minimum or the cross validation accuracy is maximum.

• Smallest Tree: The tree is pruned back slightly further than the minimum error. Technically the pruning creates a decision tree with cross-validation error within 1 standard error of the minimum error.

EARLY STOP OR PRE-PRUNING

- An alternative method to prevent overfitting is to try and stop the tree-building process early, before it produces leaves with very small samples. This heuristic is known as early stopping but is also sometimes known as pre-pruning decision trees.

- At each stage of splitting the tree, we check the cross-validation error. If the error does not decrease significantly enough then we stop. Early stopping may underfit by

stopping too early. The current split may be of little benefit, but having made it, subsequent splits more significantly reduce the error.

MAJOR PARAMETERS TO TUNE IN DECISION TREE REGRESSOR

• Max_depth

• Max_features

• Max_leaf_nodes

• Min_impurity_decrease

• Min_impurity_split

• Min_samples_leaf

• Min_samples_split

Overfitting and underfitting depends on the max_depth of the tree , Higher value of max_depth causes Overfitting, Lower value of max_depth causes Underfitting.

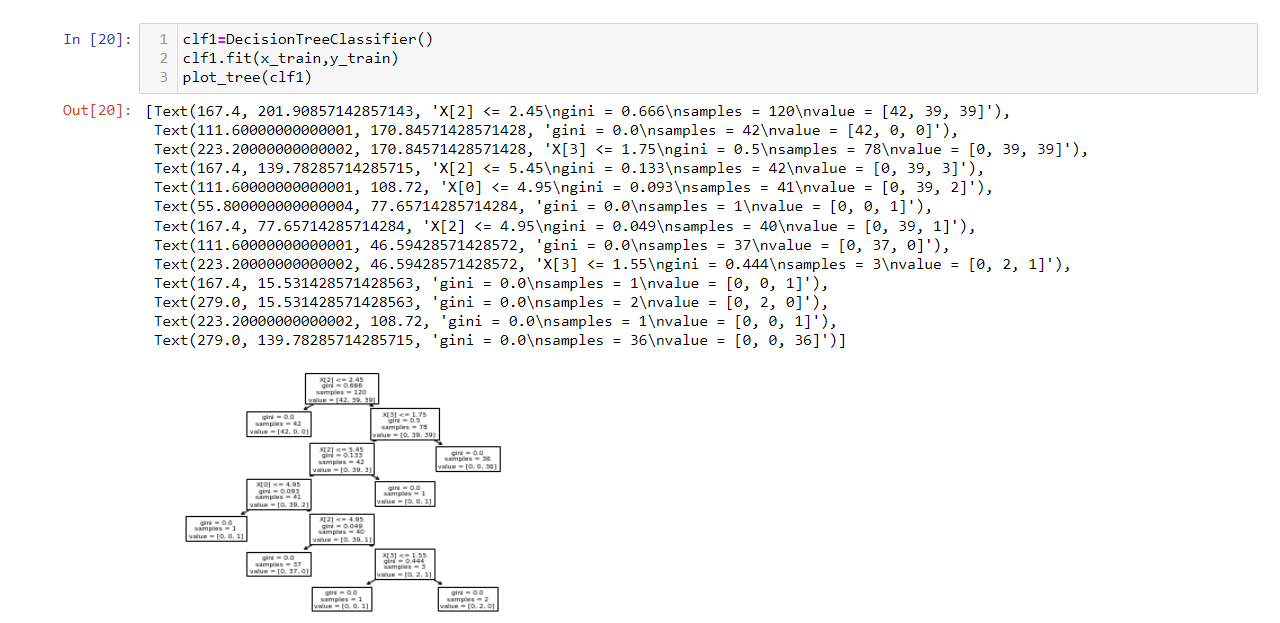

Criterion can be Gini or Entropy , splitter can be best or random, random reduces overfitting.

ASSUMPTIONS OF DECISION TREE MODEL

- In the beginning, the whole training set is considered as the root.

- Feature values are preferred to be categorical. If the values are continuous then they are discretized prior to building the model.

- Records are distributed recursively on the basis of attribute values.

- Order to placing attributes as root or internal node of the tree is done by using some statistical approach.

ADVANTAGES OF DECISION TREE

- Easy to use and understand.

- Can handle both categorical and numerical data.

- Resistant to outliers, hence require little data preprocessing.

- Really effective for big datasets (>104 data points).

DISADVANTAGES OF DECISION TREE

- Unstable Nature

- Prone to overfitting.

- Require some kind of measurement as to how well they are doing.

- Need to be careful with parameter tuning.

- Can create biased learned trees if some classes dominate.

Decision Tree Python Implementation:

- Ankit Yadav

- Dec, 27 2022