Distracted Drivers Detection Computer-Vision Project


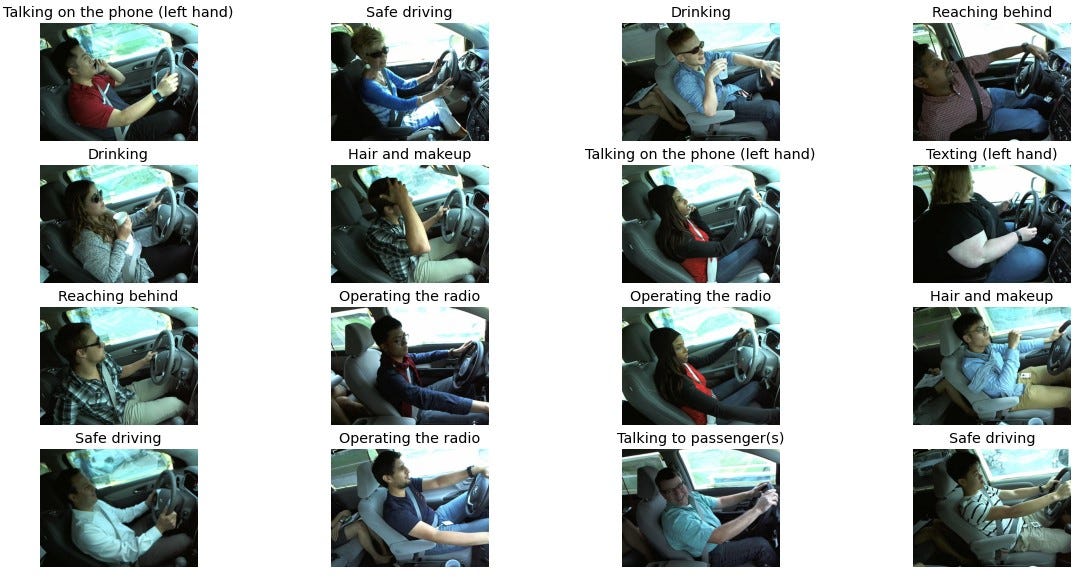

This project focuses on detecting driver distraction activities from images, which is useful for preventing vehicle accidents. I built a high-accuracy classifier that determines whether a driver is driving safely or is engaged in a distracting activity, and then deployed the model as an Android application.

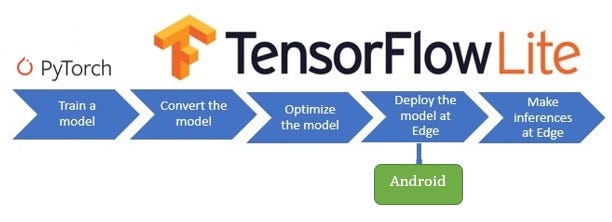

Introduction: The objective of our project was to detect driver distraction activities from images. The project was both rewarding and challenging: after training a ResNet model with PyTorch, we decided to deploy it as an Android app. To do so, we converted the PyTorch weights to TensorFlow Lite and then deployed the model on Heroku and Docker.

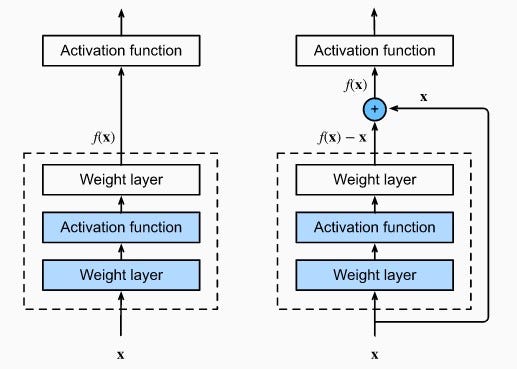

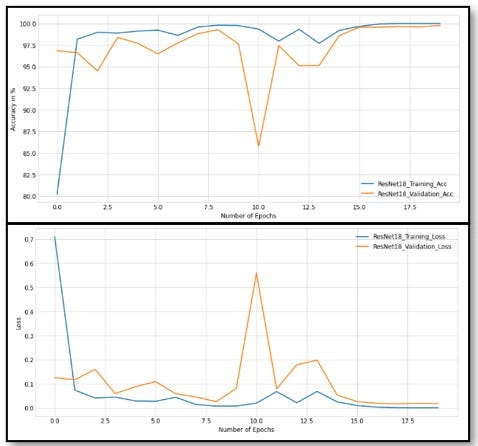

Approach: We used transfer learning on convolutional models (ResNet) pre-trained on ImageNet, taken from the PyTorch library. ResNet, short for Residual Network, is a classic neural network used as a backbone for many computer vision tasks. It won the ImageNet challenge in 2015. Its fundamental breakthrough was making it possible to successfully train extremely deep neural networks with 150+ layers. We used ResNet-18, which achieved 100% training accuracy, 99.7% validation accuracy, and 99.5% test accuracy.
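The residual idea can be illustrated without any deep-learning framework. Instead of learning a mapping H(x) directly, a residual block learns F(x) = H(x) - x and outputs F(x) + x through a skip connection. The following is a minimal NumPy sketch of one fully connected residual block with toy random weights; it illustrates the concept only and is not the ResNet-18 architecture itself, whose blocks use convolutions and batch normalization.

```python
import numpy as np


def relu(z):
    """Element-wise ReLU activation."""
    return np.maximum(z, 0.0)


def residual_block(x, w1, w2):
    """Toy residual block: out = relu(F(x) + x) with F(x) = w2 @ relu(w1 @ x)."""
    f = w2 @ relu(w1 @ x)  # learned residual branch F(x)
    return relu(f + x)     # skip connection adds the input back before the activation


rng = np.random.default_rng(0)
x = rng.standard_normal(4)
w1 = rng.standard_normal((4, 4))

# With a zero residual branch the block reduces to relu(x),
# so it can pass its input through (almost) unchanged.
out = residual_block(x, w1, np.zeros((4, 4)))
print(np.allclose(out, relu(x)))  # True
```

Because a block can fall back to (near-)identity simply by driving its residual weights toward zero, adding more blocks does not have to make the network harder to optimize; this is the usual intuition for why very deep residual networks remain trainable.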

Technology: We used PyTorch for training and fine-tuning; ONNX and TensorFlow Lite to convert the PyTorch model weights from .pth to .tflite (the format recommended for deployment); Flask, JavaScript, HTML, and CSS as the backend and frontend of the web application; a Docker image as an executable package that includes everything needed to run the application (code, runtime, system tools, system libraries, and settings); Heroku for web deployment; and finally the Cordova platform for the Android application.

Pipeline: The project pipeline consists of the following steps:

1. Data collection
2. Data preprocessing
3. Creating the data loaders (train/validation/test split)
4. Loading the pre-trained model (ResNet-18)
5. Fine-tuning (no layers frozen: training starts from the pre-trained weights, and the last layers are replaced with ones matching our classes)
6. Evaluation (confusion matrix)
7. Building a pip package
8. Building a website and an Android application
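The data-loader step splits the collected images into training, validation, and test sets. The post does not state the split proportions, so the 70/15/15 fractions, the seed, and the file names below are assumptions; this standard-library sketch only shows a reproducible shuffle-and-slice split. In PyTorch, `torch.utils.data.random_split` serves the same purpose directly on a `Dataset`.

```python
import random


def split_dataset(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle a list and split it into train/validation/test subsets.

    The fractions and seed are illustrative assumptions, not the project's values.
    """
    items = list(items)
    rng = random.Random(seed)  # local RNG so the split is reproducible
    rng.shuffle(items)
    n = len(items)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test_part = items[:n_test]
    val_part = items[n_test:n_test + n_val]
    train_part = items[n_test + n_val:]
    return train_part, val_part, test_part


# Hypothetical image file names
files = [f"img_{i}.jpg" for i in range(100)]
train_files, val_files, test_files = split_dataset(files)
print(len(train_files), len(val_files), len(test_files))  # 70 15 15
```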

Required Imports

```python
# Basic Imports
import numpy as np
import pandas as pd
from math import exp
import numpy.random as nr
import os, random, time, copy, re, glob

# PyTorch Imports
import torch, torchvision
from torch import nn, optim
import torch.nn.functional as F
from torch.autograd import Variable
from torch.utils.data import DataLoader, Dataset
from torchvision import models, transforms, datasets

# TensorFlow Imports
import tensorflow as tf
from tensorflow.keras.preprocessing import image

# Images and Plt Imports
from PIL import Image
import matplotlib.pyplot as plt
import matplotlib.image as mpimg
from IPython.display import display

# Device used by the training and evaluation functions
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
```

Training and Evaluation Functions:

Training Function

```python
def epoch_time(start_time, end_time):
    """Convert an elapsed time in seconds into (minutes, seconds)."""
    elapsed_time = end_time - start_time
    elapsed_mins = int(elapsed_time / 60)
    elapsed_secs = int(elapsed_time - (elapsed_mins * 60))
    return elapsed_mins, elapsed_secs


def calculate_accuracy(y_pred, y):
    """Fraction of samples whose highest-scoring class equals the label."""
    top_pred = y_pred.argmax(1, keepdim=True)
    correct = top_pred.eq(y.view_as(top_pred)).sum()
    acc = correct.float() / y.shape[0]
    return acc


def train(model, iterator, optimizer, criterion, device):
    epoch_loss = 0
    epoch_acc = 0
    model.train()  # enable training behaviour (dropout, batch-norm updates)
    for (x, y) in iterator:
        # Move the batch to the target device. torch.autograd.Variable is
        # deprecated, so plain tensors are used directly.
        x = torch.as_tensor(x, dtype=torch.float32).to(device)
        y = torch.as_tensor(y, dtype=torch.long).to(device)

        optimizer.zero_grad()  # clear the gradients from the previous training step
        y_pred = model(x)
        loss = criterion(y_pred, y)
        acc = calculate_accuracy(y_pred, y)
        loss.backward()  # compute gradients of the loss w.r.t. the parameters

        # Iterate over all parameters the optimizer is supposed to update
        # and use their stored .grad to update their values
        optimizer.step()

        epoch_loss += loss.item()
        epoch_acc += acc.item()
    return epoch_loss / len(iterator), epoch_acc / len(iterator)
```
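calculate_accuracy takes the arg-max of the logits over the class dimension and compares it with the labels. The same computation in NumPy, on a small hand-made batch (hypothetical values), makes the arithmetic concrete:

```python
import numpy as np


def top1_accuracy(logits, labels):
    """NumPy equivalent of calculate_accuracy: fraction of rows whose
    highest-scoring class matches the label."""
    preds = logits.argmax(axis=1)           # predicted class per sample
    return float((preds == labels).mean())  # correct / batch size


logits = np.array([[2.0, 0.1, 0.3],   # predicts class 0
                   [0.2, 1.5, 0.1],   # predicts class 1
                   [0.9, 0.4, 0.8],   # predicts class 0 (label is 2)
                   [0.1, 0.2, 3.0]])  # predicts class 2
labels = np.array([0, 1, 2, 2])
print(top1_accuracy(logits, labels))  # 0.75
```

Note that train and evaluate average these per-batch accuracies, which equals the overall accuracy exactly only when every batch has the same size; a smaller final batch is weighted the same as a full one.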

Evaluate Function:

```python
def evaluate(model, iterator, criterion, device):
    epoch_loss = 0
    epoch_acc = 0
    model.eval()  # switch dropout / batch-norm to inference behaviour
    with torch.no_grad():  # no gradient tracking is needed for evaluation
        for (x, y) in iterator:
            x = torch.as_tensor(x, dtype=torch.float32).to(device)
            y = torch.as_tensor(y, dtype=torch.long).to(device)
            y_pred = model(x)
            loss = criterion(y_pred, y)
            acc = calculate_accuracy(y_pred, y)
            epoch_loss += loss.item()
            epoch_acc += acc.item()
    return epoch_loss / len(iterator), epoch_acc / len(iterator)
```
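The pipeline evaluates the model with a confusion matrix. Given true labels and predicted labels (for example, collected by taking y_pred.argmax(1) over the test loader; how the project gathered them is an assumption here), the matrix can be built in a few lines of NumPy. `sklearn.metrics.confusion_matrix` produces the same layout: rows are true classes, columns are predicted classes. The labels below are made up.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows = true class, columns = predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # increment cm[t, p] once per (true, predicted) pair
    return cm


y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm)
# [[1 1 0]
#  [0 2 0]
#  [1 0 1]]
```

The diagonal holds the correct predictions, so np.trace(cm) / cm.sum() gives the overall accuracy, while the off-diagonal cells show which distraction classes are confused with each other.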

Fit Model Function

```python
def fit_model(model, model_name, train_iterator, valid_iterator, optimizer, loss_criterion, epochs):
    """Train the model, validate it after every epoch, and return per-epoch statistics."""
    best_valid_loss = float('inf')
    train_losses = []
    valid_losses = []
    train_accs = []
    valid_accs = []

    for epoch in range(epochs):
        start_time = time.time()

        train_loss, train_acc = train(model, train_iterator, optimizer, loss_criterion, device)
        valid_loss, valid_acc = evaluate(model, valid_iterator, loss_criterion, device)

        train_losses.append(train_loss)
        valid_losses.append(valid_loss)
        train_accs.append(train_acc*100)
        valid_accs.append(valid_acc*100)

        # Save the weights only when the validation loss reaches a new minimum
        if valid_loss < best_valid_loss:
            best_valid_loss = valid_loss
            torch.save(model.state_dict(), f'{model_name}.pt')

        end_time = time.time()
        epoch_mins, epoch_secs = epoch_time(start_time, end_time)

        print(f'Epoch: {epoch+1:02} | Epoch Time: {epoch_mins}m {epoch_secs}s')
        print(f'\tTrain Loss: {train_loss:.3f} | Train Acc: {train_acc*100:.2f}%')
        print(f'\t Val. Loss: {valid_loss:.3f} | Val. Acc: {valid_acc*100:.2f}%')

    return pd.DataFrame({f'{model_name}_Training_Loss': train_losses,
                         f'{model_name}_Training_Acc': train_accs,
                         f'{model_name}_Validation_Loss': valid_losses,
                         f'{model_name}_Validation_Acc': valid_accs})
```
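fit_model writes a checkpoint only when the validation loss reaches a new minimum, so the file on disk always holds the best epoch seen so far rather than the last one. The hypothetical helper below replays that rule on a list of made-up validation losses to show which epochs would overwrite the checkpoint:

```python
def checkpoint_epochs(valid_losses):
    """Hypothetical helper: return the 1-based epochs at which fit_model's
    rule (save when validation loss hits a new minimum) would save weights."""
    best = float('inf')
    saved = []
    for epoch, loss in enumerate(valid_losses, start=1):
        if loss < best:  # same strict comparison as in fit_model
            best = loss
            saved.append(epoch)
    return saved


# Made-up validation losses for five epochs
print(checkpoint_epochs([0.90, 0.40, 0.45, 0.30, 0.31]))  # [1, 2, 4]
```

In this example the saved file ends up holding the epoch-4 weights, which can be restored after training with model.load_state_dict(torch.load(f'{model_name}.pt')).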

Plot Statistics Function:

```python
def plot_training_statistics(train_stats, model_name):
    fig, axes = plt.subplots(2, figsize=(15, 15))

    # Top panel: training and validation loss
    axes[0].plot(train_stats[f'{model_name}_Training_Loss'], label=f'{model_name}_Training_Loss')
    axes[0].plot(train_stats[f'{model_name}_Validation_Loss'], label=f'{model_name}_Validation_Loss')

    # Bottom panel: training and validation accuracy
    axes[1].plot(train_stats[f'{model_name}_Training_Acc'], label=f'{model_name}_Training_Acc')
    axes[1].plot(train_stats[f'{model_name}_Validation_Acc'], label=f'{model_name}_Validation_Acc')

    axes[0].set_xlabel("Number of Epochs")
    axes[0].set_ylabel("Loss")
    axes[1].set_xlabel("Number of Epochs")
    axes[1].set_ylabel("Accuracy in %")
    axes[0].legend()
    axes[1].legend()
```

To use our Pip Package:

1. Install our package:

```shell
pip install Distracted-Driver-Detection
```

2. Download the fine-tuned model weights and the test images:

```python
import gdown

PytorchURL = 'https://drive.google.com/uc?id=1P9r7pCc-5eTmW4krT4GZ1F6w_miTtxJA'
TfLiteURL = 'https://drive.google.com/uc?id=1WbZD6PMETHIH6oMj0bzyG3BoDUlyO2Ll'
TestImagesURL = 'https://drive.google.com/uc?id=1sodvME9eXHuZ-4qjTxmxsLsfFsg99KpK'

PytorchModel = 'model_ft.pth'
TfLiteModel = 'model.tflite'
TestImages = 'test_imgsN.zip'

gdown.download(PytorchURL, PytorchModel, quiet=False)
gdown.download(TfLiteURL, TfLiteModel, quiet=False)
gdown.download(TestImagesURL, TestImages, quiet=False)
```

3. Import DistractedDriverDetection_Utils from distracted_driver_detection:

```python
from distracted_driver_detection import DistractedDriverDetection_Utils
import matplotlib.pyplot as plt
import matplotlib.image as mpimg
```

4. Detect the driver's distraction class using the PyTorch weights:

```python
# imgPath: path to your image; test_img_dir: a directory of test images
# (for example, the folder extracted from test_imgsN.zip)

# Get the predicted distraction class and its probability, then show the image
class_, pro = DistractedDriverDetection_Utils.PredictClass(imgPath)
print(class_, pro)
plt.imshow(mpimg.imread(imgPath));

# Plot a batch of test images from the directory with their detections
DistractedDriverDetection_Utils.predMulti_images(test_img_dir, nImages=4)
```

5. Detect the driver's distraction class using the TensorFlow Lite weights:

```python
# Get the predicted distraction class and its probability, then show the image
class_, pro = DistractedDriverDetection_Utils.tfliteModel_Prediction(imgPath)
print(class_, pro)
plt.imshow(mpimg.imread(imgPath));

# Plot a batch of test images from the directory with their detections
DistractedDriverDetection_Utils.tfliteModel_Plot(test_img_dir, nImages=4)
```
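The internals of PredictClass and tfliteModel_Prediction are not shown in this post, so the following is only an assumption about how a (class, probability) pair is typically derived from a classifier's raw outputs: apply a softmax to the logits and pick the most probable class. The helper name, the logits, and the class labels are all hypothetical.

```python
import numpy as np


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    z = logits - logits.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def top_class_with_probability(logits, class_names):
    """Hypothetical helper: return (class name, probability) of the most likely class."""
    probs = softmax(np.asarray(logits, dtype=float))
    idx = int(probs.argmax())
    return class_names[idx], float(probs[idx])


# Hypothetical labels and logits for a three-class toy example
class_names = ["safe driving", "texting", "talking on the phone"]
class_, pro = top_class_with_probability([0.5, 3.0, 1.0], class_names)
print(class_, round(pro, 3))  # texting 0.821
```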


This project was part of my internship as a Computer Vision Developer at Technocolabs Softwares, and working with the team was a great experience. Facing many challenges during the project broadened my experience and knowledge in computer vision and TensorFlow. I would like to thank Yasin Shah and Adebayo Abdulganiyu keji for their great guidance, wonderful ideas, and all their support and solutions.

I would also like to thank my team for their strong effort and cooperation; I believe that with team spirit we can achieve a lot more.

Abdullah Abdelhakeem

Mahmoud Salama

Mohamed Sebaie

Mar 25, 2022