Do you know what skewed classes are?

Skewed Classes

It’s a tricky situation that appears in classification problems when we have an imbalanced dataset, meaning that one class is heavily over-represented in the dataset.

Let us explain with a cancer detection problem:

Assume that 80% of the labels in our dataset are “yes”. In this case, a model that always predicts “yes” is correct 80% of the time.

At first, such a high accuracy might seem surprisingly good and suggest a well-implemented model, but once you dig a little deeper, it becomes obvious that the model is completely useless: it never detects a single “no” case.
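A quick way to see this is to score a constant predictor on a toy label list. The sketch below assumes a hypothetical dataset of 10 labels, 8 of which are “yes”, and a “model” that ignores its input and always answers “yes”.

```python
# Hypothetical toy dataset: 8 "yes" labels and 2 "no" labels (80% "yes").
labels = ["yes"] * 8 + ["no"] * 2

# A "model" that ignores its input and always predicts "yes".
predictions = ["yes"] * len(labels)

# Accuracy = fraction of predictions that match the true label.
accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
print(f"accuracy = {accuracy:.0%}")  # prints "accuracy = 80%"
```

The 80% comes entirely from the class imbalance: the model never predicts “no”, so it cannot tell the two classes apart at all.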

So, in this case, the error rate and accuracy aren’t good indicators of how good or bad our model is. Instead, we are going to use other metrics called precision and recall.

Precision & Recall

Precision and recall help us determine the quality of a model, especially when the data suffers from skewed classes.

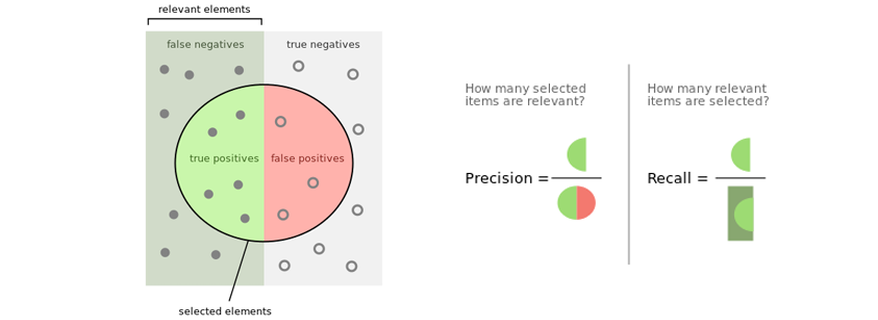

Precision

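It answers the question: how many of the selected items are relevant? In terms of true positives (TP) and false positives (FP), Precision = TP / (TP + FP).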

Recall

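It answers the question: how many of the relevant items are selected? In terms of true positives (TP) and false negatives (FN), Recall = TP / (TP + FN).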
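The two definitions can be sketched as a small function that counts TP, FP and FN for a chosen class of interest. The function name `precision_recall` and the toy data are hypothetical, and returning 0.0 when a denominator is zero is an assumption (some libraries warn or let you pick that value instead).

```python
def precision_recall(y_true, y_pred, positive):
    """Return (precision, recall), treating `positive` as the class of interest.

    Assumption: a metric whose denominator is zero is reported as 0.0.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly selected
    fp = sum(t != positive and p == positive for t, p in pairs)  # wrongly selected
    fn = sum(t == positive and p != positive for t, p in pairs)  # relevant but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical toy data: 8 "yes", 2 "no", and a model that always says "yes".
labels = ["yes"] * 8 + ["no"] * 2
predictions = ["yes"] * len(labels)

print(precision_recall(labels, predictions, positive="no"))   # (0.0, 0.0)
print(precision_recall(labels, predictions, positive="yes"))  # (0.8, 1.0)
```

Which class you call “positive” matters: these metrics are usually computed for the rare class, and with “no” as the class of interest the always-“yes” model scores zero on both.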

Trade-off between Precision & Recall

For a given model, you usually can’t raise precision and recall at the same time: tuning the model to increase precision tends to reduce recall, and vice versa. This is called the precision/recall trade-off.

Back to the cancer detection problem, suppose we use logistic regression, where h(x) is the model’s estimated probability that y = 1 (cancer):

Predict y = 1 if h(x) >= threshold

Predict y = 0 if h(x) < threshold
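The decision rule above can be sketched in a few lines. The parameters `theta` and the input `x` are hypothetical values chosen only to show that the same h(x) gives different predictions for different thresholds; `x[0] = 1` is assumed to be the bias term.

```python
import math


def h(x, theta):
    """Logistic regression hypothesis: sigmoid of the linear score theta . x."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, theta, threshold=0.5):
    """Predict y = 1 if h(x) >= threshold, otherwise y = 0."""
    return 1 if h(x, theta) >= threshold else 0


# Hypothetical parameters and input (x[0] = 1 is the bias term).
theta = [-1.0, 2.0]
x = [1.0, 0.8]  # z = -1 + 1.6 = 0.6, so h(x) is about 0.646

print(predict(x, theta, threshold=0.5))  # 1
print(predict(x, theta, threshold=0.7))  # 0
```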

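Suppose that we want to predict y = 1 only when we are very confident. This means increasing the threshold: we flag fewer patients and make fewer false positives, which leads to higher precision, but we also miss more real cases, which leads to lower recall.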

Or suppose that we want to avoid missing too many cases of cancer. This means decreasing the threshold, which leads to higher recall but lower precision.

So, the same classifier performs differently depending on the threshold value, and choosing the threshold means choosing a point on the precision/recall trade-off.
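To make the trade-off concrete, the sketch below sweeps the threshold over a small set of hypothetical h(x) outputs and true labels (1 = cancer). The scores, labels and the helper name `precision_recall_at` are made up for illustration, not taken from a real model.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when we predict y = 1 iff score >= threshold."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical h(x) outputs and true labels (1 = cancer) for ten patients.
scores = [0.95, 0.85, 0.75, 0.65, 0.55, 0.45, 0.35, 0.25, 0.15, 0.05]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 1, 0]

for threshold in (0.3, 0.5, 0.7, 0.9):
    p, r = precision_recall_at(scores, labels, threshold)
    print(f"threshold={threshold:.1f}  precision={p:.2f}  recall={r:.2f}")
```

On this toy data, raising the threshold from 0.3 to 0.9 moves precision from 0.57 up to 1.00 while recall falls from 0.80 down to 0.20.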

How to compare Precision and Recall values?

Suppose one model has recall = 0.5 and precision = 0.4, and another model has recall = 0.1 and precision = 0.7. Which model is better?

It’s important to combine these two metrics into a single number that we can compare directly. A common choice is the F1 Score:

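F1 Score = (2 * P * R) / (P + R), where P is precision and R is recall. This is the harmonic mean of P and R, so it is high only when both are high.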
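Applying the formula to the two models from the question gives a direct answer. The sketch below is a plain implementation of the formula; treating F1 as 0.0 when P + R = 0 is an assumption to avoid dividing by zero.

```python
def f1_score(p, r):
    """F1 = 2PR / (P + R); assumed to be 0.0 when P + R == 0."""
    return 2 * p * r / (p + r) if p + r else 0.0


# The two models from the question above.
first_model = f1_score(p=0.4, r=0.5)   # 0.4 / 0.9
second_model = f1_score(p=0.7, r=0.1)  # 0.14 / 0.8

print(f"first model F1  = {first_model:.3f}")   # first model F1  = 0.444
print(f"second model F1 = {second_model:.3f}")  # second model F1 = 0.175
```

By F1, the first model is better: the second model’s higher precision does not make up for its very low recall.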

- Omnia Fares

- Mar 27, 2022