What is Dimensionality Reduction?

When working on data science problems and use cases, we tend to gather as much data as we can, and in the process we end up with a large set of features. Some features are less important than others, and some are correlated with each other, so including all of them can lead to over-fitting.

With many features/variables, the data takes much more space to store, and a dataset with a large number of dimensions is difficult to analyze and visualize.

Hence, there are different ways to cut down the number of features/dimensions, which reduces the time taken to build a model and helps reduce over-fitting.

How to define Dimensionality Reduction?

Dimensionality Reduction compresses a large set of features/variables (n) onto a new feature subspace of lower dimension (k), where k < n, while retaining most of the important information. Some information is inevitably lost when the dimensions are reduced, but a good technique keeps that loss small.

Visualizing a dataset with a large number of dimensions is nearly impossible. Let's take an example. Say we have one variable, X1, with the points 0, 1, 2, 3. We can easily plot them on a 1-D line, and it should look like the graph below (ignore the y-axis and imagine the graph as a simple line, i.e. the x-axis).

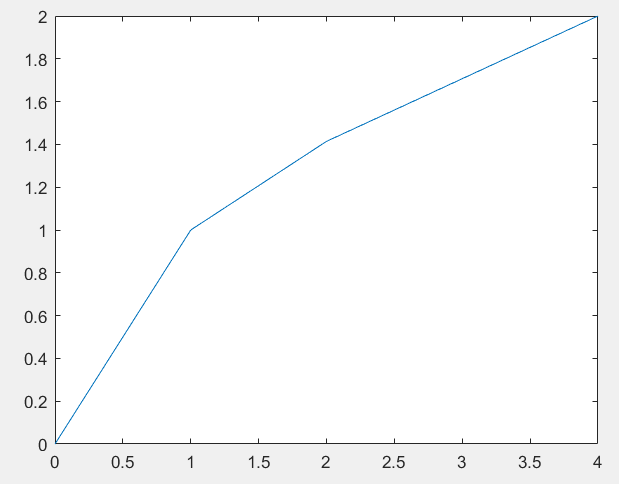

Now, say we have two variables, X1 & X2, with the co-ordinates (0,0), (1,1), (2,1.4), (3,1.6) & (4,2). These points can be plotted on a 2-D graph, with X1 on the x-axis and X2 on the y-axis.

If we add a third variable, X3, it is still possible to visualize the data by adding another axis to the graph (imagine the 2-D graph with an extra axis pointing towards you; that axis is the X3 axis, and we can still plot data with 3 variables). What if we have more than 3 variables? Can we still plot them?

The simple answer is NO!!

We cannot directly plot data in an n-dimensional space (where n > 3), because we can only perceive three spatial dimensions.
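To make the idea of going from n to k dimensions concrete, here is a minimal sketch that compresses the five (X1, X2) points from the example into a single feature. It assumes NumPy is available (the article does not use it elsewhere) and projects the points onto their direction of greatest spread, then measures how much of the variance survives. Treat it as an illustration, not as the only way to do this.

```python
import numpy as np

# The five (X1, X2) points from the 2-D example
X = np.array([[0, 0], [1, 1], [2, 1.4], [3, 1.6], [4, 2]], dtype=float)

# Center the data so the projection is taken around the mean
X_centered = X - X.mean(axis=0)

# SVD of the centered data: the rows of Vt are the directions of greatest variance
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)

# Project every 2-D point onto the first direction (n = 2 -> k = 1)
X_1d = X_centered @ Vt[0]

# Fraction of the total variance kept by the single new feature
retained = S[0] ** 2 / np.sum(S ** 2)

print("1-D representation:", np.round(X_1d, 3))
print("Variance retained:", round(float(retained), 3))
```

The retained fraction comes out high but below 1, which is the small loss of information that every reduction from n to k dimensions has to accept.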

Different Dimensionality Reduction Techniques

There are various feature selection & extraction techniques which ultimately help in reducing dimensions. In this article, we shall discuss 4 common dimensionality reduction techniques, also known as feature extraction techniques:

Principal Component Analysis (PCA):

Principal Component Analysis (PCA) is basically a dimensionality reduction algorithm, but it can also be useful as a tool for visualization, noise filtering, feature extraction & engineering, and much more. The scikit-learn library offers a PCA class. Note that PCA is an unsupervised transformer rather than a classifier, so it never looks at the labels. This code snippet illustrates how to create PCA components:

    from sklearn.decomposition import PCA

    pca_classifier = PCA(n_components=2)
    my_pca_components = pca_classifier.fit_transform(X_train)
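The snippet above assumes an existing X_train. As a self-contained sketch, the same call can be tried on the Iris dataset that ships with scikit-learn. The choice of dataset and the standardization step are assumptions made for this illustration; the point is to show how explained_variance_ratio_ reports how much information the 2 components keep.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Iris: 150 samples, 4 features (labels are ignored, PCA is unsupervised)
X, _ = load_iris(return_X_y=True)

# PCA is sensitive to feature scale, so standardize first
X_scaled = StandardScaler().fit_transform(X)

# Reduce 4 features to 2 principal components
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)

# Share of the total variance captured by each component
total_kept = pca.explained_variance_ratio_.sum()

print("Reduced shape:", X_2d.shape)
print("Variance ratio per component:", pca.explained_variance_ratio_)
print("Total variance kept:", total_kept)
```

If the total kept is too low for your use case, increasing n_components is the usual first thing to try.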

Watch the videos below to get a fair idea about PCA: https://www.youtube.com/watch?v=gnFh__0Rw70&t=3s Let's understand the math behind PCA in this video: https://www.youtube.com/watch?v=SiaPnnVE5UY

Linear Discriminant Analysis (LDA):

LDA is like PCA, but it focuses on maximizing separability among known categories. LDA differs from PCA because:

- Apart from finding the component axes, with LDA we are interested in the axes that maximize the separation between multiple classes

- LDA is supervised because it uses the y-variable (dependent)

The scikit-learn library offers a LinearDiscriminantAnalysis class, which can be used both as a classifier and as a transformer. This code snippet illustrates how to create LDA components:

    from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

    lda_classifier = LinearDiscriminantAnalysis(n_components=2)
    my_lda_components = lda_classifier.fit_transform(X_train, y_train)

Looking at the code, there's not much difference between PCA & LDA. However, while performing the fit_transform, apart from the X variables, we need to pass the y component as well, because LDA also uses the class labels and focuses on maximizing separability among known categories. Note that n_components cannot exceed min(number of classes - 1, number of features), so n_components=2 requires at least 3 classes. Watch the video below to get a fair idea about LDA: https://www.youtube.com/watch?v=8ILJ1rMBJAM&t=16s
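As a runnable sketch of the LDA call, the Iris dataset bundled with scikit-learn (an assumption for this illustration, it is not part of the original snippet) has 3 classes and 4 features, so LDA can produce at most 2 components. The variable max_components is a name introduced here for clarity.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Iris: 150 samples, 4 features, 3 classes
X, y = load_iris(return_X_y=True)

# LDA can produce at most min(n_classes - 1, n_features) components
n_classes = len(np.unique(y))
max_components = min(n_classes - 1, X.shape[1])

# Unlike PCA, fit_transform needs the labels y as well
lda = LinearDiscriminantAnalysis(n_components=max_components)
X_lda = lda.fit_transform(X, y)

print("Maximum LDA components:", max_components)
print("Reduced shape:", X_lda.shape)
```

On a two-class problem the same calculation gives a single component, so n_components=2 would be rejected there.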

Kernel PCA (k-PCA):

Kernel PCA is used for data that is not linearly separable. We know that PCA works well for linearly separable classes/datasets, but what about non-linearly separable datasets? As much of our real-world data is not linearly separable, we might not achieve what we need using LDA or PCA, as they are linear transformations. This is where Kernel PCA comes into the picture: it uses a kernel function to implicitly project the dataset into a higher-dimensional space where it becomes linearly separable. The scikit-learn library offers a KernelPCA class. This code snippet illustrates how to create KPCA components:

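    from sklearn.decomposition import KernelPCA

    # The default kernel is "linear" (plain PCA); "rbf" is one non-linear choice
    kpca_classifier = KernelPCA(n_components=2, kernel="rbf")
    # KernelPCA is unsupervised, so y_train is not passed
    my_kpca_components = kpca_classifier.fit_transform(X_train)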
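To see why the kernel matters, here is a small sketch on two concentric circles, a classic non-linearly separable dataset. The dataset (make_circles), the RBF kernel, the gamma value and the use of logistic regression as a simple linear check are all assumptions chosen for this illustration, and gamma in particular usually needs tuning on real data.

```python
from sklearn.datasets import make_circles
from sklearn.decomposition import PCA, KernelPCA
from sklearn.linear_model import LogisticRegression

# Two concentric circles: no straight line can separate the classes
X, y = make_circles(n_samples=1000, factor=0.2, noise=0.05, random_state=0)

# Plain PCA only rotates the data, so the circles stay circles
X_pca = PCA(n_components=2).fit_transform(X)

# Kernel PCA with an RBF kernel (gamma=5 is an illustrative value)
X_kpca = KernelPCA(n_components=2, kernel="rbf", gamma=5).fit_transform(X)

# A linear classifier is used as a quick check of linear separability
acc_pca = LogisticRegression().fit(X_pca, y).score(X_pca, y)
acc_kpca = LogisticRegression().fit(X_kpca, y).score(X_kpca, y)

print("Linear model accuracy on PCA features:", acc_pca)
print("Linear model accuracy on Kernel PCA features:", acc_kpca)
```

The linear model should do clearly better on the Kernel PCA features, since the kernel projection pulls the inner and outer circle apart along the first component.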

Watch the video below to understand Kernel PCA & QDA: https://www.youtube.com/watch?v=ispBXqC34Ak

Quadratic Discriminant Analysis (QDA):

Linear Discriminant Analysis (LinearDiscriminantAnalysis) and Quadratic Discriminant Analysis (QuadraticDiscriminantAnalysis) are two classic classifiers, with, as their names suggest, a linear and a quadratic decision surface, respectively. These classifiers are attractive because they have closed-form solutions that can be easily computed, are inherently multi-class, have proven to work well in practice, and have no hyperparameters to tune.
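The difference between the two decision surfaces can be sketched on synthetic data. The data below is an assumption made up for this illustration: two classes with the same center but very different spread, which no straight line can separate. Note also that scikit-learn's QuadraticDiscriminantAnalysis is a classifier and, unlike LinearDiscriminantAnalysis, has no transform method, so the sketch compares classification accuracy rather than reduced features.

```python
import numpy as np
from sklearn.discriminant_analysis import (
    LinearDiscriminantAnalysis,
    QuadraticDiscriminantAnalysis,
)

# Synthetic data: both classes are centered at the origin,
# but class 1 is spread four times wider than class 0
rng = np.random.default_rng(0)
X0 = rng.normal(0.0, 1.0, size=(500, 2))
X1 = rng.normal(0.0, 4.0, size=(500, 2))
X = np.vstack([X0, X1])
y = np.array([0] * 500 + [1] * 500)

# LDA assumes a shared covariance, so its boundary is a straight line
lda_acc = LinearDiscriminantAnalysis().fit(X, y).score(X, y)

# QDA fits one covariance per class, so its boundary can curve
qda_acc = QuadraticDiscriminantAnalysis().fit(X, y).score(X, y)

print("LDA accuracy:", lda_acc)
print("QDA accuracy:", qda_acc)
```

On data like this, QDA can wrap a curved boundary around the tight class, while LDA is stuck with a line through the middle.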

Comparing the decision boundaries of the two methods, Linear Discriminant Analysis can only learn linear boundaries, while Quadratic Discriminant Analysis can learn quadratic boundaries and is therefore more flexible.

The entire Dimensionality Reduction code will be uploaded soon.

If you are new to Python, please go through this Python Tutorial: https://www.youtube.com/watch?v=p3l_NXm-4r8&list=PLymcv5WXEpKh6uK1ak5-ReqTluWdSEK2p

I hope this document gives you a basic to intermediate understanding of Dimensionality Reduction techniques. Keep exploring our posts, cheers!!

- Zep Analytics

- Mar, 06 2022